With over 150 million monthly active users in Europe, the majority aged 18-34, TikTok offers tremendous opportunities for charities to achieve reach and authentic engagement, particularly with younger audiences. In this guide, we will cover the steps you can take to keep your audience safe and share simple strategies to manage negativity and trolling behaviour on TikTok.

StrawberrySocial is a social media moderation agency specialising in brand protection, online safety and community engagement for charities. Our team of experts have the knowledge and skills to protect, moderate and engage with online audiences 24/7/365. We are proud to work with leading brands and charities including NHS England, NSPCC, RNIB, Just Eat, SuperAwesome, Which? and Samaritans.

Understanding the challenges

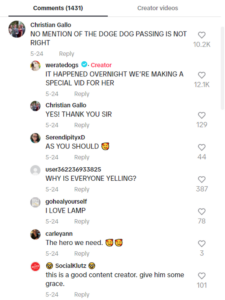

Common types of negative interactions on TikTok include trolling, cyberbullying, scams, and spam.

- Trolling on TikTok can involve users posting inflammatory comments designed to upset or provoke others, disrupting the platform’s community and undermining positive interactions

- Cyberbullying on TikTok involves individuals or groups targeting users through hurtful comments, duets, and stitches that mock victims, often causing significant emotional distress

- TikTok spam comes in the form of mass-produced, irrelevant, or harmful messages, which clutter users’ feeds and can include scams or malicious links

For charities especially, these negative interactions can lead to reputational damage and a loss of trust, impact fundraising efforts and ultimately undermine the charity’s mission. Persistent online harassment can also demoralise staff and volunteers, reduce engagement and create a toxic environment for users.

Types of content

Content that can attract trolls includes controversial topics, political discussions, personal opinions, sensitive issues such as race, gender, and religion, and posts that showcase personal achievements or vulnerabilities.

On a platform like TikTok, younger audiences appreciate and seek out authenticity, intersectionality and representation. If your charity wants to truly connect with them, steering clear of these content types altogether is rarely a realistic way to avoid trolls.

Proactive ways to reduce chances of trolls and manage negativity

- Set clear guidelines and communicate them regularly and in an accessible way – being creative in how you lay out and enforce them can improve compliance.

- Designate moderators and train staff and volunteers using a structured process that all can follow.

- Use moderation tools to filter and review comments before they are posted – this helps to ensure nothing is missed and also saves time.

- Monitor engagement and identify trends in negative behaviour – use this information to inform your future content and engagement.

- Familiarise yourself with best practices and guidelines for community moderation on TikTok.

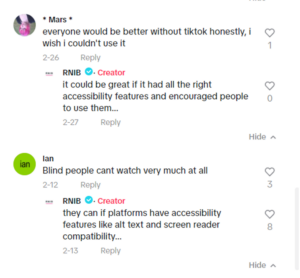

Encourage positive engagement

Actively engaging with your audience and using language that promotes respectful discourse can help mitigate the impact of trolls and negative comments. Encouraging constructive feedback and highlighting positive contributions can also help to shift the focus away from negativity. Actively reward or promote positive and worthwhile comments and user-generated content (UGC).

Stitching and dueting public content is a wonderful way to get your community engaged and sharing your mission. But it does come with a downside. Anyone can stitch or duet your content if your account is set to public and your privacy settings are set to ‘Everyone’.

You won’t be able to stop every stitch or duet, but schedule some time for quick hashtag searches to spot possible problems. If it becomes a bigger problem, you can always delete all duets and/or stitches from a video’s privacy settings.

Schedule regular spot-checks for impersonation accounts

A tactic often employed by scammers is to recreate the public username of a charity in order to send DMs from these accounts to followers. These scammers will ask users to go to another platform (such as Telegram) to speak directly, or to donate.

Schedule regular spot-checks for impersonation accounts and report any you find to TikTok. In addition, include notes in your channel information so that your followers can identify potential scammers trying to impersonate your official page. Encourage your followers to report them too.
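If your team collects usernames during these spot-checks (for example, from a manual search), a small script can help flag the ones that closely resemble your official handle. The sketch below is only an illustration: the handle `examplecharity`, the function name and the 0.8 similarity threshold are all assumptions, and it does not connect to TikTok in any way. Treat anything it flags as a prompt for a human to look more closely, not as proof of impersonation.

```python
import re
from difflib import SequenceMatcher


def normalise(handle):
    """Lower-case a handle and drop everything except letters."""
    return re.sub(r"[^a-z]", "", handle.lower())


def looks_like_impersonation(candidate, official, threshold=0.8):
    """Flag handles that resemble, but are not, the official handle.

    The threshold is an assumed starting point; tune it for your own name.
    """
    if candidate.lower() == official.lower():
        return False  # this is the official account itself
    similarity = SequenceMatcher(
        None, normalise(candidate), normalise(official)
    ).ratio()
    return similarity >= threshold


# Hypothetical handles gathered by hand during a spot-check.
official_handle = "examplecharity"
found_handles = ["examplecharity", "example_charity_", "gardeningtips"]

to_review = [
    handle
    for handle in found_handles
    if looks_like_impersonation(handle, official_handle)
]
print(to_review)
```

Stripping underscores, full stops and digits before comparing means that common tricks, such as adding a trailing underscore or number to your name, are still caught.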

How to handle negative interactions when they happen

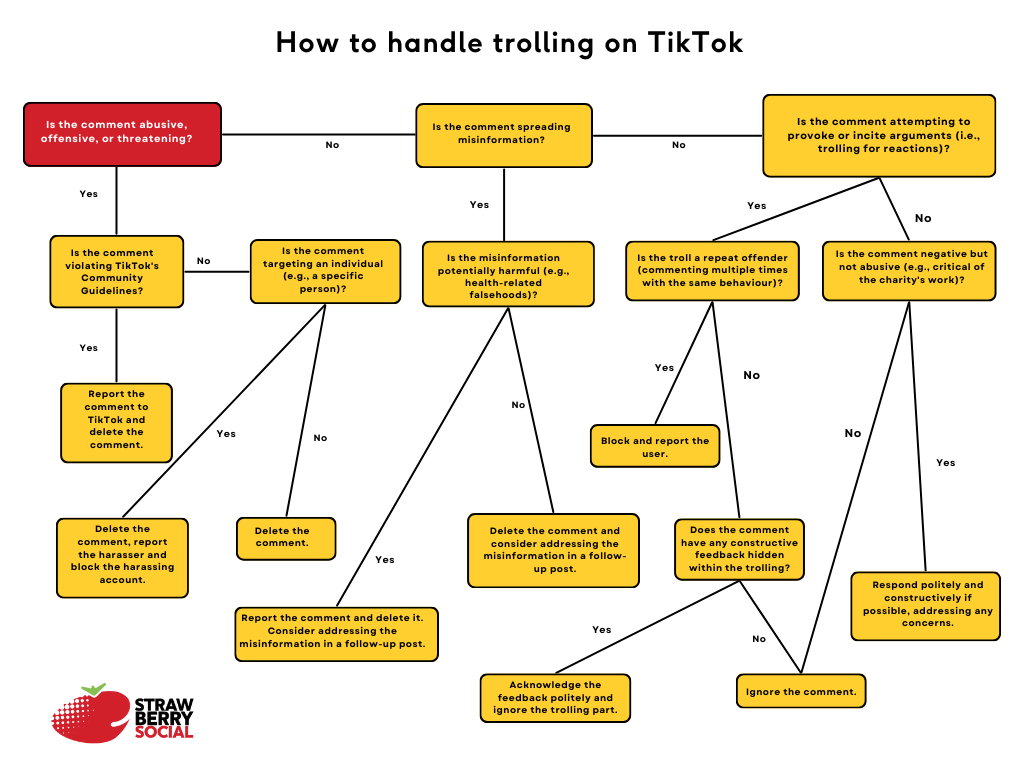

Prepare guidelines on when and how to respond to negative comments constructively, when to delete, and when to report and block a user. For example:

- Abusive, offensive, or threatening comments: Report, delete, and block as needed

- Misinformation: Delete, report if harmful, and consider addressing it publicly

- Provocative trolling: Block repeat offenders; ignore or respond to others based on context (sometimes it’s better to just ignore them and let them fade away due to lack of attention)

- Constructive criticism: Respond with facts – calmly, politely and constructively
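If your moderators work from a shared tool or spreadsheet, the example guidelines above could be written down as a simple lookup so that everyone applies them consistently. The following is a minimal sketch, not a finished moderation system: the category names, the function name and the `harmful` and `repeat_offender` flags are illustrative assumptions, and it presumes a human moderator has already decided which category a comment falls into.

```python
from enum import Enum


class CommentType(Enum):
    ABUSIVE = "abusive, offensive or threatening"
    MISINFORMATION = "misinformation"
    TROLLING = "provocative trolling"
    CONSTRUCTIVE = "constructive criticism"


def recommended_actions(comment_type, harmful=False, repeat_offender=False):
    """Return suggested actions for a comment a moderator has classified."""
    if comment_type is CommentType.ABUSIVE:
        return ["report", "delete", "block as needed"]
    if comment_type is CommentType.MISINFORMATION:
        actions = ["delete"]
        if harmful:
            actions.append("report")
        actions.append("consider addressing it publicly")
        return actions
    if comment_type is CommentType.TROLLING:
        if repeat_offender:
            return ["block"]
        return ["ignore or respond, depending on context"]
    if comment_type is CommentType.CONSTRUCTIVE:
        return ["respond with facts, calmly and politely"]
    raise ValueError(f"Unknown comment type: {comment_type!r}")


# Example: a moderator has judged a comment to be harmful misinformation.
print(recommended_actions(CommentType.MISINFORMATION, harmful=True))
```

Keeping the rules in one place like this makes it easier to update them as your guidelines evolve, and to train new staff and volunteers on the same process.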

We’ve created a decision tree to help you.